Choosing the right mobile app development partner has never been easy. Many companies talk about speed and innovation, but do they truly walk the talk? Mobile development is a discipline that should move carefully through a defined sequence of phases. The real question is whether a partner is committed to following that process or is simply looking for the fastest way to get things done.

Retention data tells a tough story. Research across 31 mobile app categories shows average Day 1 retention at 25.3%. By Day 30, it drops to 5.7%. That decline usually does not stem from a single small defect but from weak planning, insufficient testing, and premature releases.

We have seen this pattern with clients who come to us with mobile app development projects after working with other agencies. The app gets launched, and everyone moves on, assuming the hard part is done. A few weeks later, crash reports increase. User reviews turn negative. Feature adoption stays low. At that point, fixing mistakes costs far more than doing things properly from the start.

That is why the app development lifecycle has to work as a continuous loop. You develop the app, release it, gather new data and requirements, and start a new iteration. That is simply how it works.


What Makes Mobile App Development a Lifecycle?

A mobile app development life cycle is the structured, repeatable process of planning, designing, building, testing, deploying, and maintaining a mobile application. Also called the ADLC (application development life cycle), it defines how a mobile product evolves from initial concept through post-launch iteration. When followed as a true cycle rather than a one-time sequence, each release feeds real user data back into the next round of planning.

Stages of the Mobile App Development Life Cycle

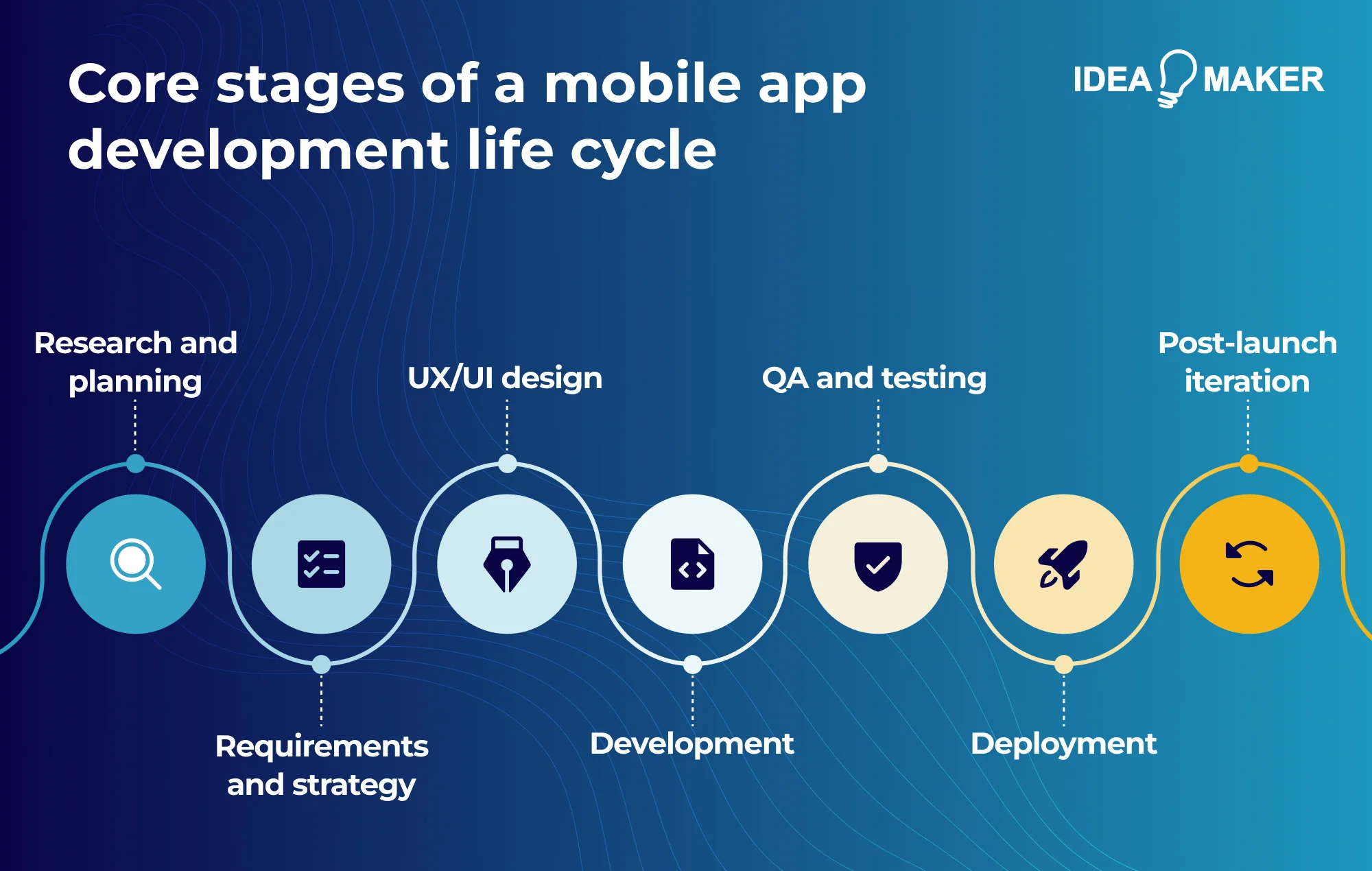

The core stages of a mobile app development life cycle are:

- Research and planning — define purpose, audience, and feasibility

- Requirements and strategy — gather specs, choose tech stack

- UX/UI design — wireframes, prototyping, and user flow mapping

- Development — front-end and back-end build, typically in agile sprints

- QA and testing — functional, performance, and security validation

- Deployment — app store submission and rollout

- Post-launch iteration — monitor analytics, gather feedback, repeat the cycle

Most mobile app development agencies say they "follow a lifecycle," but in practice, they operate in launch mode, focused only on shipping the product and moving on. The real test comes after the first production release. Does the team go back to planning with actual usage data?

A linear development model (often called the Waterfall model) treats release as the finish line: once requirements, design, development, testing, and deployment are complete, the project is considered done. This works when requirements are stable and unlikely to change, such as in a regulated government application with fixed specifications. But mobile products rarely operate in stable environments.

A cyclical model treats release as just another stage in an ongoing loop. A feature is planned, built, tested, and released in a limited scope, sometimes as an MVP. After it goes live, the team observes how users interact with it. That feedback informs the next planning cycle. The goal is not to finish a project. The goal is to keep improving a product.

Why Mobile Products Demand a Cyclical Approach

If you build for mobile, you operate in an environment that changes every year. Platform vendors push OS updates multiple times annually. Hardware manufacturers introduce new performance characteristics. APIs and policies shift. An app that performs well at launch can gradually lose compatibility if it does not adapt. When competitors introduce new features, they redefine baseline user expectations.

When you truly follow the life cycle as a continuous loop, you:

- Review analytics and user feedback after every release

- Refine priorities based on real usage data and performance metrics

- Reduce long-term risk through incremental fixes rather than major overhauls

- Protect core architecture while keeping the product competitive as conditions change

This is what separates a development process from a development life cycle — the commitment to sustained iteration, not just a one-time build.

Importance of the Mobile App Development Lifecycle

The mobile app development life cycle (MADLC) is a structured framework that organizes the work of building an app into distinct, manageable phases. Adhering to it does not guarantee success, but ignoring it almost certainly guarantees waste, rework, and frustrated users.

Reduces unnecessary rework

A clear lifecycle prevents avoidable rework. When you understand the mobile app development lifecycle stages, you make architectural decisions with future iterations in mind. That means fewer rushed fixes and fewer expensive refactors later.

Supports realistic planning

It also makes planning realistic. The app development lifecycle forces you to consider integration testing, release coordination, and monitoring before committing to timelines. Deadlines reflect technical reality rather than optimism.

Prepares for technical risk

The lifecycle of mobile app development also reduces technical risk. It helps you detect integration challenges, performance limits, and scalability concerns early rather than in production.

Improves maintainability

Over time, this discipline improves code quality and maintainability. A well-planned application development life cycle encourages modular design, proper versioning, and consistent releases.

Strengthens cross-functional communication

It keeps everyone informed. Engineers and QA share visibility into every stage of app development, which reduces friction and increases delivery confidence.

Improves long-term product resilience

Most importantly, it improves long-term product resilience. Continuous iteration helps the product adapt without destabilizing the core system. You respond to platform and security updates and to changing user expectations without rebuilding everything from scratch.

Mobile App Development Lifecycle vs Development Process

You may hear “lifecycle” and “process” used as if they mean the same thing. They do not. If you mix them up, you risk optimizing execution while ignoring long-term product health.

A development process focuses on how work gets done. It defines how requirements are reviewed, how code is written, how testing is handled, and how releases are approved. It answers practical questions about ownership, execution order, tooling, and governance. When your process is strong, delivery becomes controlled and predictable.

The mobile app development lifecycle focuses on the product itself. It describes how your app moves from idea to release and then through multiple iterations in production. It forces you to think beyond shipping features and consider how the product performs, adapts, and improves over time.

The difference is now easy to understand. Your process manages activities. The lifecycle manages the product journey.

There are situations where a strong process alone may be enough. If you are building a small internal tool with stable requirements and limited external exposure, disciplined execution can deliver reliable results. In that case, clearly defined steps and quality checks may cover most of your risk.

Mobile products rarely operate in such predictable conditions. Platforms release updates regularly, devices introduce new hardware capabilities, user expectations increase, and business priorities change as markets evolve. If you focus only on execution, you may ship efficiently while your product slowly falls behind.

That is why lifecycle thinking matters. When you adopt it, you treat release as one stage in a longer journey. You plan for iteration. You expect change. You prepare to revisit decisions based on real production data instead of assuming your initial hypotheses will hold forever.

The strongest approach is to use both concepts together. Your process keeps day-to-day execution disciplined and reliable. Your lifecycle keeps your long-term product direction intentional and adaptable. When you align both, you avoid chaos in delivery and stagnation in strategy.

Common Mobile App Development Lifecycle Models

Different products call for different lifecycle models. The most common ones are discussed below.

Does the Waterfall Model Struggle in Mobile Development?

The Waterfall model follows a strict sequence. Requirements are finalized first. Design comes next. Development follows. Testing happens near the end. After deployment, the focus goes to maintenance.

This approach works when requirements are stable. It provides clarity and proper documentation. In mobile environments, requirements rarely stay fixed for long. Platform updates, device differences, and app store feedback often force changes midstream. Since Waterfall discourages revisiting earlier phases, changes can slow progress and increase cost.

Why Does Agile Fit Mobile?

Agile breaks development into short cycles. Each cycle delivers something usable. Stakeholders review progress frequently. Feedback guides the next sprint.

In mobile apps, this matters. Real user behavior often reveals issues that planning cannot predict. Faster feedback reduces the risk of building features users do not value.

What About Iterative and Incremental Models?

Iterative and incremental models often appear similar, but they address different delivery strategies within the mobile app development lifecycle.

In an iterative model, we revisit the same feature across multiple cycles and improve it progressively. For example, an onboarding flow may begin with a basic email and password registration. In later iterations, we can introduce multi-factor authentication, add SSO integration, configure reCAPTCHA for bot protection, and refine validation logic based on real production data. Each cycle strengthens the same feature set without expanding overall scope.

In an incremental model, functionality expands in controlled segments by adding new capabilities to the same product. Consider a mobile app that reads body movement data and provides health analytics. The first release may focus only on step tracking and basic activity logging. The next increment can introduce posture detection using device sensors. A later increment may add sleep pattern analysis and advanced health dashboards. Each increment delivers usable functionality while expanding overall system capability.

In practice, you will likely want to combine both approaches as your product scales: introduce new capabilities incrementally while refining existing features iteratively. This lets the product expand in scope without destabilizing features that are already in production.

Where DevOps Changes the Equation

In many companies, development and operations used to work in separate silos. The DevOps model brings them together. Developers push code more frequently. Automated tests run every time a developer pushes changes.

Continuous integration checks whether new code works with the existing system. Continuous deployment makes releases smoother and more consistent. Instead of waiting weeks for a release, product updates can be deployed to production in smaller increments.

Monitoring also becomes part of daily engineering work. You can detect performance drops and stability issues before users start reporting them. With this approach, mobile delivery moves faster without sacrificing control. Fewer manual handoffs reduce delays, and automation lowers release risk while keeping deployment speed consistent.

How Do You Choose the Right Model?

There is no single model that matches every mobile application. The right choice depends on where your application stands today.

Early-stage apps often benefit from Agile or incremental models. These approaches support frequent updates.

If your app is more stable and already serving a large user base, you will need stronger governance. In that case, you should combine planning with automation and disciplined release practices to protect stability.

When selecting a model, consider application maturity and how often your requirements change. The model should support your reality rather than force you into a rigid structure.

The Core Stages of the Mobile App Development Lifecycle

The models discussed above determine how you organize delivery. This section focuses on the phases you actually move through in each lifecycle iteration.

When we apply the mobile app development lifecycle in practice, we do not treat phases as isolated steps. We move from planning to design. From design to development. From development to testing and release. Then we return to planning with real production data in hand.

In the following sections, you will see how each phase connects to the next and how your decisions in one stage directly affect long-term product performance.

The following table summarizes each phase:

| Phase | Description | Key Deliverables |
| --- | --- | --- |
| Ideation & Strategy | Define the problem, target audience, value proposition, and business model. | Product brief, competitive analysis, feature priorities |
| Requirements | Capture functional and non-functional requirements, define acceptance criteria. | PRD, user stories, technical constraints |
| UX/UI Design | Research users, create wireframes, build interactive prototypes, and validate with usability testing. | Wireframes, mockups, design system, prototype |
| Architecture & Planning | Select tech stack, define system architecture, plan sprints, and set up CI/CD. | Architecture diagram, sprint plan, CI/CD pipeline |
| Development | Build features iteratively in sprints with code reviews and integration testing. | Working builds, code repository, API integrations |
| Testing & QA | Execute unit, integration, UI, performance, security, and accessibility testing. | Test plans, bug reports, test coverage metrics |
| Deployment | Submit to app stores, manage release rollout, and configure feature flags. | Store listing, release notes, rollout strategy |
| Maintenance & Iteration | Monitor performance, fix bugs, respond to feedback, and ship updates. | Analytics dashboards, update roadmap, hotfix process |

Phase 1: Strategy and Planning – Setting Direction for Each Iteration

Every cycle begins with defining what this release needs to achieve. Planning is not about rewriting the product vision every time. It sets the objective for the current iteration.

At this stage, teams usually focus on:

- Defining measurable goals for the next release

- Reviewing analytics from previous versions

- Examining user feedback and support tickets

- Identifying technical constraints

- Analyzing bugs reported by users in previous releases, if this is not the first milestone release

- Researching similar competitor products

- Going through the product backlog

Usage data plays a central role. Retention rates, crash logs, bugs reported, feature requests, feature improvement requests, and performance metrics show what actually happens in production.

We often come across clients who hire us for project rescue. In those cases, poor initial planning or unrealistic assumptions have already created problems. A feature expected to drive engagement shows low adoption. An onboarding flow that worked in demos produces high drop-off in production. Performance that seemed stable on flagship devices degrades on mid-range hardware once real usage begins.

After reviewing the data, the next step is deciding what to work on. New features, bug fixes, and experimental ideas all compete for limited development time. The plan must align with what your engineers can realistically build within that cycle. When too much is committed up front, pressure builds later in development, and deadlines slip.
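To make that capacity check concrete, here is a minimal Python sketch of a greedy sprint plan. It assumes effort is estimated in story points and that a fixed share of capacity (20% here, a hypothetical figure rather than a recommendation) is held back for bug fixes, refactoring, and dependency updates. `BacklogItem`, `plan_sprint`, and the backlog entries are illustrative names, not part of any planning tool.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    points: int    # estimated effort in story points
    priority: int  # lower number = more important


def plan_sprint(backlog, capacity_points, maintenance_share=0.2):
    """Greedy sprint plan (hypothetical helper).

    Holds back `maintenance_share` of capacity for maintenance work, then
    commits the highest-priority items that still fit in what remains.
    Items that do not fit are deferred instead of squeezed in.
    """
    reserved = round(capacity_points * maintenance_share)
    remaining = capacity_points - reserved
    committed, deferred = [], []
    for item in sorted(backlog, key=lambda i: i.priority):
        if item.points <= remaining:
            committed.append(item)
            remaining -= item.points
        else:
            deferred.append(item)
    return committed, deferred, reserved


if __name__ == "__main__":
    backlog = [
        BacklogItem("New onboarding screen", 13, 1),
        BacklogItem("Checkout redesign", 8, 2),
        BacklogItem("Offline mode", 13, 3),
        BacklogItem("Dark mode polish", 5, 4),
    ]
    committed, deferred, reserved = plan_sprint(backlog, capacity_points=40)
    print([i.title for i in committed])  # ['New onboarding screen', 'Checkout redesign', 'Dark mode polish']
    print([i.title for i in deferred])   # ['Offline mode']
```

Greedy selection is not an optimal packing, but it is predictable, and it makes overcommitment visible as deferred work during planning rather than as slipped deadlines later.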

Phase 2: Design and Prototyping – Evolving the User Experience

Once priorities are clear, the next step is deciding how your users will actually experience these changes.

An idea only creates value when people can understand it, navigate it, and complete actions without confusion. This phase turns business goals into screens, flows, and real interactions your users will touch every day.

Design rarely works perfectly the first time. We often see clients come to us after a build where the screens technically function but feel confusing or heavy. A small change in button placement can improve conversions. A simplified navigation structure can reduce drop-offs.

Before writing production code, we validate design decisions through practical methods such as:

- Writing user stories that describe specific goals and edge cases

- Running short usability sessions with interactive prototypes

- Reviewing support tickets to understand repeated pain points

- Speaking with active users to understand what they actually care about

Prototypes allow you to test assumptions early instead of fixing expensive mistakes later. When you see how real users move through the app, gaps become obvious.

Consistency also matters. Each milestone release should introduce change without disrupting the familiarity users have built up. Shared UI components and design guidelines help maintain that consistency across releases.

Phase 3: Development and Integration – Building Iteratively

Development progresses in increments. Agile sprints support incremental feature delivery and help validate progress regularly.

Continuous integration supports codebase evolution. Every commit triggers automated builds and checks. This surfaces integration conflicts early, while they are still small, and protects overall stability.

Your app likely depends on external systems such as:

- Authentication providers

- Payment gateways

- Analytics SDKs

- Push notification services

Managing these integrations requires pinning dependency versions and monitoring compatibility whenever a provider ships an update. At IdeaMaker, we have seen how unmanaged third-party updates introduce unexpected production bugs and security vulnerabilities.
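A lightweight way to catch unmanaged updates is a build-time check that compares the SDK versions a build actually resolved against the versions your team pinned. The sketch below assumes plain numeric `MAJOR.MINOR.PATCH` versions and treats a major-version change as potentially breaking, following semantic versioning conventions. The SDK names are hypothetical placeholders, not real packages.

```python
def parse_version(version):
    """Turn '4.2.1' into (4, 2, 1) so versions compare numerically.
    Assumes plain numeric MAJOR.MINOR.PATCH strings (no pre-release tags)."""
    return tuple(int(part) for part in version.split("."))


def check_dependencies(pinned, resolved):
    """Compare the SDK versions a build actually resolved against the
    versions the team pinned. Returns (name, pinned, resolved, issue) tuples."""
    findings = []
    for name, pinned_version in pinned.items():
        resolved_version = resolved.get(name)
        if resolved_version is None:
            findings.append((name, pinned_version, None, "missing"))
            continue
        p, r = parse_version(pinned_version), parse_version(resolved_version)
        if r[0] != p[0]:
            # Under semantic versioning, a major bump may contain breaking changes.
            findings.append((name, pinned_version, resolved_version, "major version change"))
        elif r < p:
            findings.append((name, pinned_version, resolved_version, "downgrade"))
    return findings


if __name__ == "__main__":
    # Hypothetical SDK names and versions.
    pinned = {"auth-sdk": "4.2.0", "payments-sdk": "2.1.3", "analytics-sdk": "7.0.0"}
    resolved = {"auth-sdk": "4.3.1", "payments-sdk": "3.0.0"}
    for finding in check_dependencies(pinned, resolved):
        print(finding)
```

Minor and patch upgrades pass silently here; only changes that deserve a human review (a new major version, a downgrade, a missing SDK) are reported.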

Development does not focus solely on feature implementation. Each new feature increases codebase complexity and affects maintainability. Without scheduled refactoring, technical debt accumulates and degrades performance, stability, and release velocity. Reserve part of each sprint for refactoring, dependency updates, and performance tuning instead of focusing only on new features.

Phase 4: Testing and Quality Assurance – Continuous Validation

Testing does not begin after development ends. In mobile development, testing runs alongside implementation.

You can combine functional and non-functional testing to validate multiple dimensions of the application:

- Functional testing to confirm features behave as expected

- Usability testing to detect friction in real workflows

- Performance testing to measure load handling and memory usage

- Regression testing to validate that new changes do not break existing features

- Smoke testing during milestone releases when there’s limited time to do full regression testing

- Security and compatibility testing across devices and OS versions

Automation largely determines how fast you can release. CI pipelines execute unit tests, integration tests, and end-to-end tests on every build. We have seen that scheduling nightly automation builds to run the full regression suite helps catch integration issues before they reach production.

Test parallelization further reduces validation time. Instead of running one environment at a time, the same test suite executes simultaneously across multiple infrastructure combinations. For a mobile product, this may include different Android versions, iOS versions, device types, screen resolutions, and browser engines. A checkout flow, for example, can be validated in parallel on a mid-range Android device, the latest iPhone, and a tablet form factor.
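The fan-out itself is simple to express. The following minimal sketch uses Python's standard `ThreadPoolExecutor` to run one suite across a device matrix concurrently. `run_suite` is a placeholder for whatever actually launches tests on an emulator or device farm, and the matrix entries and the failing tablet are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def run_matrix(configs, run_suite, max_workers=4):
    """Run the same test suite on every configuration concurrently.

    `run_suite(config) -> bool` stands in for whatever launches the suite on
    one device/OS combination (an emulator, a device farm, etc.) and reports
    whether it passed. Threads suit this because each run mostly waits on
    remote devices rather than using local CPU.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        outcomes = list(pool.map(run_suite, configs))  # keeps input order
    return dict(zip(configs, outcomes))


# Hypothetical matrix for a checkout flow: (platform, OS version, form factor).
CONFIGS = [
    ("android", "14", "mid-range phone"),
    ("ios", "17", "current iPhone"),
    ("android", "13", "tablet"),
]


def fake_checkout_suite(config):
    """Placeholder runner: pretends the checkout flow fails only on tablets."""
    _platform, _os_version, form_factor = config
    return form_factor != "tablet"


if __name__ == "__main__":
    results = run_matrix(CONFIGS, fake_checkout_suite)
    print([cfg for cfg, passed in results.items() if not passed])  # [('android', '13', 'tablet')]
```

Total wall-clock time then approaches that of the slowest single configuration rather than the sum of all of them, as long as enough devices are available.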

If you skip continuous QA, defects will slowly build up and damage user trust. Preventing quality degradation requires practices such as:

- Maintaining automated test suites with good coverage to run on every build

- Scheduling nightly full-regression automation runs

- Conducting defect triage sessions with engineering and QA

- Reviewing new Jira tickets daily and assigning QA for validation and root cause analysis

- Maintaining a dedicated QA defect backlog

- Assigning a severity level to bugs

- Fixing high-severity bugs before approving the GA release candidate
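The last two practices combine naturally into an automated release gate. Here is a minimal sketch that assumes open defects are exported as `(ticket_id, severity)` pairs. Which severities block a GA release candidate is a team policy, so the `BLOCKING_SEVERITIES` set and the ticket IDs are hypothetical.

```python
from collections import Counter

# Assumption: which severities block a GA release candidate is a team policy.
BLOCKING_SEVERITIES = {"critical", "high"}


def severity_mix(open_defects):
    """Count open defects per severity level."""
    return Counter(severity for _, severity in open_defects)


def approve_ga_candidate(open_defects, blocking=BLOCKING_SEVERITIES):
    """Approve the release candidate only if no open defect has a blocking severity.
    `open_defects` is a list of (ticket_id, severity) pairs."""
    blockers = [ticket for ticket, severity in open_defects if severity in blocking]
    return not blockers, blockers


if __name__ == "__main__":
    defects = [("APP-101", "high"), ("APP-102", "low"), ("APP-103", "medium")]
    print(severity_mix(defects))
    print(approve_ga_candidate(defects))  # (False, ['APP-101'])
```

Returning the blocking ticket IDs, not just a yes/no answer, gives the triage session a concrete list to work through.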

Phase 5: Deployment and Release – Continuous Delivery to Users

The first production release introduces the product to actual users. After that, every subsequent milestone release becomes part of an ongoing release cycle. Each of these milestones may include:

- New features

- Performance improvements

- Bug fixes

- Security patches

Release management becomes important at this stage. Maintain strict version control and tag every production build. Release timing depends on sprint cadence, business priorities, and app store review policies. Google Play and App Store submission guidelines also affect scheduling and approval windows.

Risk management becomes essential once updates move into production. A defect that slips through testing no longer affects a test device; it affects real users.

For that reason, avoid pushing updates to everyone at once. Start small with a staged rollout instead. A new version may go live for 5% of users first. Then observe reported errors, login success rates, and API error rates. If the numbers stay within normal ranges, expand the rollout.
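Google Play and the App Store both offer built-in controls for gradual releases, so you may never need to write this logic yourself. The idea is still worth seeing, for example when a feature is gated behind a server-controlled flag. The minimal sketch below assigns each user a stable bucket from 0 to 99 by hashing, and advances to the next rollout step only when crash rate, login success rate, and API error rate stay within limits. The step sizes, metric names, and limit values are hypothetical.

```python
import hashlib

ROLLOUT_STEPS = (1, 5, 20, 50, 100)  # hypothetical percentages


def rollout_bucket(user_id: str, release: str) -> int:
    """Map a user to a stable bucket in [0, 99] for a given release.
    Hashing release + user keeps the assignment stable across sessions
    while giving each release a different sample of users."""
    digest = hashlib.sha256(f"{release}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def is_in_rollout(user_id: str, release: str, percent: int) -> bool:
    return rollout_bucket(user_id, release) < percent


def next_rollout_percent(current: int, metrics: dict, limits: dict) -> int:
    """Expand to the next step only if every health metric is within limits;
    otherwise hold at the current percentage for investigation."""
    healthy = (
        metrics["crash_rate"] <= limits["max_crash_rate"]
        and metrics["login_success_rate"] >= limits["min_login_success_rate"]
        and metrics["api_error_rate"] <= limits["max_api_error_rate"]
    )
    if not healthy:
        return current
    larger = [step for step in ROLLOUT_STEPS if step > current]
    return larger[0] if larger else current


if __name__ == "__main__":
    limits = {"max_crash_rate": 0.005, "min_login_success_rate": 0.98, "max_api_error_rate": 0.01}
    metrics = {"crash_rate": 0.002, "login_success_rate": 0.991, "api_error_rate": 0.004}
    print(next_rollout_percent(5, metrics, limits))  # 20
    print(is_in_rollout("user-42", "3.8.0", 5))
```

Because buckets are stable, a user who received the new version at 5% keeps it at 20%, which keeps the observed cohort consistent while the rollout grows.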

In higher-risk releases, we can use a canary approach. In this method, the update goes first to a tightly controlled group before wider exposure. This might include internal staff or users in one region. The goal is to catch defects early before the wider audience ever sees them.

Backend and mobile releases must remain synchronized. API usage, authentication flows, and database schema updates require coordination. We have seen agencies release a mobile update that depended on a new backend API while the backend deployment was still pending. Users immediately began experiencing authentication failures. The issue was not faulty code but release sequencing. Coordinated deployment planning prevents this type of production disruption.
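One way to enforce that sequencing is a pre-release check in the pipeline: the new app build declares the minimum backend versions it needs, and the mobile release stays blocked until those versions are live. The sketch below assumes each service reports a plain numeric `MAJOR.MINOR.PATCH` version. The service names and versions are hypothetical.

```python
def parse_version(version: str) -> tuple:
    """'2.4.0' -> (2, 4, 0); assumes plain numeric versions."""
    return tuple(int(part) for part in version.split("."))


def backend_ready(required: dict, deployed: dict):
    """Return (ready, missing) where `missing` lists services that are not yet
    deployed at the minimum version the new app build depends on."""
    missing = [
        service
        for service, min_version in required.items()
        if service not in deployed
        or parse_version(deployed[service]) < parse_version(min_version)
    ]
    return not missing, missing


if __name__ == "__main__":
    # The new app build needs auth-api 2.4.0, but production still runs 2.3.5.
    required = {"auth-api": "2.4.0", "profile-api": "1.9.0"}
    deployed = {"auth-api": "2.3.5", "profile-api": "1.9.2"}
    print(backend_ready(required, deployed))  # (False, ['auth-api'])
```

In the authentication scenario above, a check like this would have held the mobile release until the new authentication API was deployed.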

Phase 6: Monitoring and Analytics – Gathering Insights That Drive the Next Cycle

Once deployed, the system enters observation mode. Production data becomes the primary source of decision-making.

You should monitor:

- crash rates

- latency metrics

- memory consumption

- API response times

Engagement indicators such as session length, feature adoption, and retention rates reveal behavioral patterns.

Quantitative data provides measurable outcomes. Qualitative input from support channels, reviews, and feedback sessions explains the user experience behind those numbers.
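For the technical metrics listed earlier, the arithmetic is simple, but the choice of statistic matters. This minimal sketch computes a session crash rate and nearest-rank latency percentiles. The latency samples are invented, and "crash rate per session" is one common definition among several (some teams measure per user or per launch).

```python
import math


def crash_rate(crashed_sessions: int, total_sessions: int) -> float:
    """Share of sessions that ended in a crash."""
    return crashed_sessions / total_sessions if total_sessions else 0.0


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value such that at least
    `pct` percent of observations are less than or equal to it."""
    if not values:
        raise ValueError("no observations")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


if __name__ == "__main__":
    # Hypothetical API response times in milliseconds from one release window.
    latencies_ms = [120, 80, 95, 300, 110, 105, 90, 85, 100, 2000]
    print(crash_rate(12, 4000))                    # 0.003
    print(percentile(latencies_ms, 50))            # 100
    print(percentile(latencies_ms, 95))            # 2000
    print(sum(latencies_ms) / len(latencies_ms))   # 308.5
```

The average of 308.5 ms describes almost no real request. The median and p95 separate typical responses from the slow tail that users actually notice, which is why latency dashboards usually report percentiles.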

Long-term monitoring like this exposes improvement opportunities:

- It shows underused features.

- It identifies performance bottlenecks.

- It reveals mismatches between expected and actual behavior.

Phase 7: Maintenance and Optimization – Keeping Your App Healthy

After multiple release cycles, maintenance becomes a continuous responsibility. Platforms change on their own schedule. Apple and Google push OS updates. New devices arrive with different screen sizes and chipsets. Security rules tighten. Each of these changes affects application compatibility, so an app that worked perfectly six months ago can start failing on newer OS versions.

Therefore, ongoing maintenance should include:

- Bug resolution

- Performance tuning

- OS compatibility updates

- Testing against multiple devices

- Security patching

- Dependency upgrades

- Refactoring to control technical debt

At IdeaMaker, we often allocate dedicated sprint capacity to maintenance tasks.

Closing the Loop – How Insights Feed Back Into Planning

The lifecycle only works when production insights flow back into planning. Analytics should directly influence the next roadmap discussion rather than remain isolated in dashboards.

In each cycle, you should review:

- Feature adoption rates to decide what to expand or retire

- Performance metrics to identify areas that require optimization

- Bug reports and stability trends to prioritize fixes

- User feedback to detect usability gaps not visible during internal testing

Assumptions set during early planning must be validated against real usage data. When behavior differs from expectations, direction needs to change. That’s how roadmaps should evolve based on measurable outcomes rather than original hypotheses.

This feedback loop keeps the mobile app development lifecycle adaptive. Planning drives release. Release generates measurable data. That data informs the next iteration. This continuous cycle helps a mobile application remain competitive long after its initial release.

How to Personalize the Mobile App Lifecycle to Your Needs

Your product does not need the same level of process as every other app. A fitness startup launching an MVP does not operate like a bank releasing a regulated mobile banking app. The way you design your app development life cycle should reflect that reality.

A simple internal field-service app may move quickly with lightweight documentation and short iterations. A healthcare app handling patient data requires deeper security reviews, compliance checks, and staged approvals before each release. The complexity of the system and the sensitivity of the data should determine how detailed your lifecycle becomes.

Context also matters.

- Startups often prioritize speed along with product-market fit.

- Enterprises balance governance, integration, and audit requirements.

- Internal apps focus on operational efficiency rather than public scalability.

Iteration speed should match the level of risk involved. For example, a social media feature can be deployed weekly, while even a small update to a fintech payment flow may need to go through multiple review rounds.

The tools and team available to you also shape the lifecycle. A small team without separate QA or DevOps roles tends to run a leaner lifecycle by default. Developers handle every task themselves, including running tests and validating deployments, so the testing and release phases are tightly integrated with development. This produces shorter feedback loops and a more fluid, iterative lifecycle.

When you work with a larger organization, you have more options. There is an opportunity to delegate responsibilities, which makes the lifecycle more structured. Testing, security review, and deployment are handled by specialized teams. This introduces formal checkpoints, documentation requirements, and staged approvals between phases. The lifecycle becomes more layered and follows a governance model.

Managing Multiple Lifecycle Iterations Simultaneously

As your product matures, you will rarely work on a single track. While one group prepares a new feature set, another stabilizes the current production version. Managing multiple lifecycle iterations at the same time requires deliberate coordination across app development stages.

Parallel development tracks often separate innovation from stability. For example, a fitness app may run an experimental AI coaching feature in a beta branch while the main branch focuses on bug fixes and performance updates for active users. This approach protects existing revenue while allowing controlled experimentation.

At the same time, you need to balance maintenance work, new feature development, and long-term improvements to your app's modular architecture. Ignoring legacy support slows growth. Ignoring innovation reduces competitiveness. Sustainable delivery depends on allocating capacity across all three without overloading release cycles or degrading production stability.

Key Metrics to Track Throughout the Lifecycle

You should map specific metrics to each phase instead of tracking everything at once.

Product and Business KPIs by Phase

Planning (Phase 1)

- Retention rate – Percentage of users who return after a release, showing whether previous iterations delivered value.

- Open defect severity mix – Distribution of open issues by severity level (for example, L1, L2, and normal), which indicates whether stability should take priority over new features.

- Backlog size vs engineering capacity – Comparison of planned work against available resources to avoid unrealistic commitments.

Development (Phases 2-3)

- Sprint velocity – Backlog items completed in one sprint.

- On-time delivery rate – Share of planned tickets closed in the scheduled release.

- Defect rate – Issues reported per milestone build.

Launch (Phases 4-5)

- Activation rate – Share of new users who complete the first core action.

- Feature adoption rate – Share of active users who use a newly released feature.

- Cohort retention – Share of a user cohort that returns within a defined time period.

- Net Promoter Score (NPS) – User willingness to recommend the app.

Growth (Phases 6-7)

- Churn rate – Share of users who stop using the app within a given time range.

- DAU/MAU ratio – Daily active users divided by monthly active users.

- ARPU – Average revenue per active user.

- Referral rate – Share of new users acquired through existing user referrals.
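Several of these KPIs are simple ratios once you have sets of user IDs per period. The following minimal sketch shows one common definition of each. Teams define churn and retention windows differently, so treat these formulas as assumptions to adapt, and the sample numbers as invented.

```python
def retention_rate(cohort: set, returned: set) -> float:
    """Share of a cohort (for example, users who installed on a given day)
    who came back within the measurement window."""
    return len(cohort & returned) / len(cohort) if cohort else 0.0


def churn_rate(active_at_start: set, active_at_end: set) -> float:
    """Share of users active at the start of a period who are no longer
    active at its end (one common definition; teams vary)."""
    if not active_at_start:
        return 0.0
    return len(active_at_start - active_at_end) / len(active_at_start)


def dau_mau(avg_daily_active: float, monthly_active: int) -> float:
    """Average daily actives over the month divided by monthly actives."""
    return avg_daily_active / monthly_active if monthly_active else 0.0


def arpu(revenue: float, active_users: int) -> float:
    """Average revenue per active user for the same period."""
    return revenue / active_users if active_users else 0.0


if __name__ == "__main__":
    cohort = set(range(1, 11))  # ten users installed on day 0
    returned_day_1 = {1, 2, 3}
    print(retention_rate(cohort, returned_day_1))  # 0.3
    print(churn_rate({1, 2, 3, 4}, {1, 2, 5}))     # 0.5
    print(dau_mau(1500, 10000))                    # 0.15
    print(arpu(2500.0, 1000))                      # 2.5
```

Note that user 5 in the churn example is a new user during the period and does not reduce churn; mixing new and existing users in one ratio is a common way these numbers get distorted.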

Indicators of App Health, Performance, and Adoption

Business KPIs alone do not reflect technical stability. Operational metrics show whether the application can sustain growth.

- Crash rate and ANR rate – Frequency of crashes and of Application Not Responding (ANR) freezes, an Android-specific signal.

- API response time – Backend latency affecting user experience.

- Battery and memory consumption – Resource usage across device categories.

- App store rating trends – Movement in public ratings after releases.

These indicators confirm whether the app stays reliable as features expand from one release to the next.

Metrics That Inform When to Iterate, Optimize, or Pivot

Some metrics demand action from you:

- Low feature adoption often means users do not see the value in a feature. It may also mean the feature is hard to find.

- High churn points to a gap between what the product promises and what users actually experience.

- Stable engagement with slow user growth can mean the product needs clearer positioning.

- A spike in crash rates after a release requires immediate fixes before any new features are developed.

Useful metrics do more than fill dashboards. They guide roadmap decisions. They decide what gets built next.
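The signals above can be encoded as simple, explicit rules, which keeps roadmap discussions anchored to the same definitions every cycle. This is a minimal sketch: every metric name and limit is a hypothetical placeholder to tune for your product, and a rule firing is a prompt for investigation, not an automatic decision.

```python
def recommend_actions(metrics: dict, limits: dict) -> list:
    """Translate a metrics snapshot into next-step signals, most urgent first.
    All metric names and limits are hypothetical; tune them to your product."""
    actions = []
    # A crash spike after a release outranks everything else.
    if metrics["crash_rate"] > limits["crash_spike_factor"] * metrics["baseline_crash_rate"]:
        actions.append("fix stability before new feature work")
    if metrics["feature_adoption"] < limits["min_feature_adoption"]:
        actions.append("review feature value and discoverability")
    if metrics["churn_rate"] > limits["max_churn_rate"]:
        actions.append("investigate gap between promise and experience")
    engagement_stable = abs(metrics["engagement_change"]) <= limits["engagement_tolerance"]
    if engagement_stable and metrics["user_growth"] < limits["min_user_growth"]:
        actions.append("revisit product positioning")
    return actions


if __name__ == "__main__":
    limits = {
        "crash_spike_factor": 2.0,
        "min_feature_adoption": 0.10,
        "max_churn_rate": 0.05,
        "engagement_tolerance": 0.02,
        "min_user_growth": 0.02,
    }
    snapshot = {
        "crash_rate": 0.012, "baseline_crash_rate": 0.004,
        "feature_adoption": 0.08, "churn_rate": 0.03,
        "engagement_change": 0.01, "user_growth": 0.005,
    }
    for action in recommend_actions(snapshot, limits):
        print(action)
```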

Role of AI and Automation in the Mobile App Lifecycle

AI is no longer experimental on mobile. It is now a part of everyday engineering tasks. For a deeper look at practical implementation approaches, see our guide on how to integrate AI into your app.

AI-assisted planning and backlog prioritization

Planning traditionally depended on stakeholder input and basic analytics. Today, usage clustering models group user behavior patterns automatically. Feature request mining tools scan support tickets and app store reviews to detect recurring themes (for example, the same checkout delay issue reported across support emails, Play Store reviews, and social media comments).
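The core idea of cross-channel theme detection can be shown without any machine learning. The sketch below is a deliberately simple keyword-matching stand-in for the ML-based mining tools described above: it tags each piece of feedback with themes and keeps only themes that appear in at least two different channels. The theme names, keyword lists, and feedback samples are hypothetical.

```python
from collections import Counter

# Hypothetical theme definitions; ML-based tools learn themes instead of using fixed lists.
THEMES = {
    "checkout delay": ("checkout", "payment"),
    "login problems": ("login", "log in", "sign in", "password"),
}


def themes_in(text: str) -> set:
    """Return every theme whose keywords appear in the text (case-insensitive)."""
    lowered = text.lower()
    return {theme for theme, keywords in THEMES.items()
            if any(keyword in lowered for keyword in keywords)}


def recurring_themes(feedback, min_channels=2):
    """feedback: list of (channel, text). Returns {theme: mention_count} for
    themes that appear in at least `min_channels` different channels."""
    mentions = Counter()
    channels = {}
    for channel, text in feedback:
        for theme in themes_in(text):
            mentions[theme] += 1
            channels.setdefault(theme, set()).add(channel)
    return {theme: count for theme, count in mentions.items()
            if len(channels[theme]) >= min_channels}


if __name__ == "__main__":
    feedback = [
        ("support_email", "Checkout takes forever to load"),
        ("play_store", "Payment screen froze at checkout"),
        ("social", "why is checkout so slow today"),
        ("play_store", "Can't log in after update"),
    ]
    print(recurring_themes(feedback))  # {'checkout delay': 3}
```

Requiring multiple channels filters out one-off complaints and surfaces issues that users hit independently, which is the kind of signal worth moving up the backlog.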

We have used behavior segmentation to identify which in-app events correlate with retention after day seven. That insight directly changed the backlog order. Instead of building new features, the team improved a workflow whose use predicted higher stickiness.
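The simplest form of that analysis compares day-7 retention between users who triggered a given event and users who did not. Here is a minimal sketch, assuming each user record carries a set of event names and a retained flag. The event name `saved_workout` and the sample users are hypothetical. A gap between the two rates shows correlation, not causation, so an experiment is still the safest way to confirm a change in priorities.

```python
def day7_retention_by_event(users, event):
    """users: list of dicts with 'events' (set of event names) and
    'retained_d7' (bool). Returns (retention with event, retention without)."""
    def rate(flags):
        return sum(flags) / len(flags) if flags else 0.0

    with_event = [u["retained_d7"] for u in users if event in u["events"]]
    without_event = [u["retained_d7"] for u in users if event not in u["events"]]
    return rate(with_event), rate(without_event)


if __name__ == "__main__":
    users = [
        {"events": {"saved_workout"}, "retained_d7": True},
        {"events": {"saved_workout", "shared"}, "retained_d7": True},
        {"events": {"saved_workout"}, "retained_d7": False},
        {"events": set(), "retained_d7": True},
        {"events": {"shared"}, "retained_d7": False},
        {"events": set(), "retained_d7": False},
    ]
    with_rate, without_rate = day7_retention_by_event(users, "saved_workout")
    print(f"with: {with_rate:.0%}, without: {without_rate:.0%}")  # with: 67%, without: 33%
```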

Automated testing and quality assurance

AI improves test coverage in areas humans often miss.

- Visual AI testing detects UI misalignment across device resolutions.

- Flaky test detection tools identify unstable automation cases.

- Log analysis models flag abnormal error patterns before release.
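Flaky-test detection often starts from a simple rule before any model is involved. The sketch below is that rule-based baseline, not an AI tool: a test is flagged when it both passed and failed on the same commit, because its outcome changed while the code did not. The run records are assumed to be `(test_name, commit_sha, passed)` tuples from your CI history, and the sample data is invented.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """runs: iterable of (test_name, commit_sha, passed).
    A test is flagged as flaky if it both passed and failed on the same commit,
    i.e. its outcome changed while the code did not."""
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _commit), seen in outcomes.items() if len(seen) == 2})


if __name__ == "__main__":
    runs = [
        ("test_login", "a1", True),
        ("test_login", "a1", False),    # same commit, different result: flaky
        ("test_checkout", "a1", True),
        ("test_checkout", "b2", False), # different commit: possibly a real regression
        ("test_search", "a1", True),
    ]
    print(find_flaky_tests(runs))  # ['test_login']
```

Keeping flaky tests separate from genuine failures matters because a suite that fails randomly teaches the team to ignore red builds.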

In mobile, where device fragmentation is real, this reduces regression risk.

Predictive analytics for behavior and retention

User behavior leaves patterns in production data. Session frequency shows how often people return. Navigation paths reveal where they hesitate or exit. Uninstall events often follow repeated friction points. Churn probability models use these signs to estimate who may leave next.

Many e-commerce apps rely on recommendation engines to decide what each user sees. Product feeds change based on browsing history. Recently viewed items influence the next session. This is the same basic idea you see in streaming apps or large online stores.
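The "recently viewed items influence the next session" pattern can be sketched with simple item co-occurrence: items often viewed together in past sessions are suggested alongside what the user just looked at. This Python sketch is a deliberately simple stand-in for the collaborative filtering or embedding models large stores typically use; the session data and helper names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_cooccurrence(sessions):
    """Count how often each pair of items is viewed in the same session."""
    co = defaultdict(Counter)
    for items in sessions:
        for a, b in combinations(set(items), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(co, recently_viewed, k=3):
    """Score items by co-occurrence with the user's recent views."""
    scores = Counter()
    for item in recently_viewed:
        scores.update(co.get(item, {}))
    for item in recently_viewed:
        scores.pop(item, None)  # skip items the user has just seen
    return [item for item, _ in scores.most_common(k)]


sessions = [
    ["shoes", "socks"],
    ["shoes", "socks", "laces"],
    ["shoes", "hat"],
    ["socks", "laces"],
]
co = build_cooccurrence(sessions)
print(recommend(co, ["shoes", "socks"]))
```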

Health and fitness apps apply similar techniques. Activity data from wearables directs goal setting. Missed workout streaks trigger lighter plans. Consistent performance leads to higher targets.
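The adaptive-plan behavior described above can be expressed as a few rules over recent activity. In this Python sketch, the thresholds (three missed days, seven consistent days) and step sizes (20% down, 10% up) are hypothetical values chosen for illustration, not figures from any particular app.

```python
def trailing_run(days, value):
    """Length of the run of `value` at the end of `days` (most recent last)."""
    count = 0
    for day in reversed(days):
        if day != value:
            break
        count += 1
    return count


def next_target(current, recent_days, step_up=0.10, step_down=0.20,
                missed_limit=3, consistent_days=7):
    """Adjust a daily activity target (e.g. steps) from recent results.

    recent_days: booleans, most recent last; True means the goal was met.
    All thresholds and step sizes are hypothetical defaults.
    """
    if trailing_run(recent_days, False) >= missed_limit:
        return round(current * (1 - step_down))  # missed streak: lighter plan
    if trailing_run(recent_days, True) >= consistent_days:
        return round(current * (1 + step_up))    # consistent: raise the bar
    return current


print(next_target(10000, [True] * 7))
print(next_target(10000, [True, False, False, False]))
```

Checking the missed streak first means a struggling user is never pushed harder, which mirrors the "lighter plan first" logic in the paragraph above.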

In each case, the system adapts to observed usage patterns rather than fixed assumptions. When product decisions rely on behavioral data, engagement metrics usually improve.

Intelligent monitoring and anomaly detection

Traditional monitoring relies on static thresholds. AI-based systems learn a baseline for crash rate, latency, and API behavior. When a metric deviates beyond its normal variance, alerts trigger automatically.

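This is especially useful during staged rollouts, where a regression affecting a small group of early users should surface before the release reaches everyone.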
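The core idea of a learned baseline can be illustrated with a rolling mean and standard deviation: each new sample is compared against recent history, and large deviations are flagged. This Python sketch is a simple statistical stand-in for the ML models commercial monitoring tools use; the window size, the `k` multiplier, and the crash-rate series are hypothetical.

```python
from statistics import mean, stdev


def detect_anomalies(values, window=7, k=3.0):
    """Flag samples that deviate from a rolling baseline by more than k std devs.

    values: time-ordered metric samples (e.g. hourly crash rate in %).
    The baseline for each sample is the mean and standard deviation of the
    previous `window` samples. Returns indices of anomalous samples.
    """
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change counts as a deviation.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) > k * sigma:
            anomalies.append(i)
    return anomalies


crash_rates = [0.5, 0.6, 0.5, 0.55, 0.6, 0.5, 0.55, 0.52, 2.4, 0.55]
print(detect_anomalies(crash_rates))
```

Because the threshold scales with recent variance, a noisy metric does not page the team on every wobble, while a quiet one still alerts on a small but unusual jump.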

Automation in deployment and release management

CI/CD pipelines automate build signing, smoke testing, artifact versioning, and rollout. Some systems automatically pause a rollout when the automated test pass rate falls below the required threshold, either 100% or a predefined tolerance.
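A rollout gate like this reduces to a small decision function. The Python sketch below assumes a hypothetical stage list (1%, 5%, 20%, 50%, 100% of users) and a `min_pass_rate` parameter that defaults to 100% but can be lowered to a team's predefined tolerance; neither reflects a specific CI/CD product's configuration.

```python
ROLLOUT_STAGES = [1, 5, 20, 50, 100]  # hypothetical % of users per stage


def next_rollout_action(current_pct, tests_passed, tests_total, min_pass_rate=1.0):
    """Decide whether a staged rollout advances, pauses, or is complete.

    min_pass_rate=1.0 requires every automated test to pass; a team could
    set a lower predefined tolerance instead (e.g. 0.99).
    Returns (action, rollout percentage).
    """
    if tests_total == 0:
        return ("pause", current_pct)  # no test evidence, do not advance
    if tests_passed / tests_total < min_pass_rate:
        return ("pause", current_pct)
    later_stages = [s for s in ROLLOUT_STAGES if s > current_pct]
    if not later_stages:
        return ("complete", current_pct)
    return ("advance", later_stages[0])


print(next_rollout_action(5, 200, 200))
print(next_rollout_action(5, 199, 200))
print(next_rollout_action(5, 199, 200, min_pass_rate=0.99))
```

Pausing at the current percentage, rather than rolling back automatically, keeps the decision about a full rollback with the team.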

Used correctly, AI supports your engineers instead of replacing them. It reduces repetitive work and improves decision quality at scale.

What Happens When You Skip Phases

| Phase Skipped | Typical Consequences |
| --- | --- |
| Ideation & Strategy | Building features nobody asked for; unclear product-market fit; wasted development resources; and misaligned team effort. |
| Requirements | Scope creep, endless rework, and conflicting expectations between business and engineering teams. |
| UX/UI Design | Poor usability, high uninstall rates, and costly post-launch redesigns that disrupt the existing user base. |
| Architecture | Technical debt accumulates rapidly; scalability bottlenecks emerge under real-world load; painful rewrites. |
| Testing & QA | Crashes in production, security vulnerabilities, negative app store reviews, and loss of user trust. |
| Maintenance | Declining ratings, compatibility issues with new OS releases, growing churn, and eventual obsolescence. |

How Idea Maker Supports the Full Mobile App Lifecycle

At Idea Maker, we support the full mobile app development lifecycle, not only the build phase. We work with product owners from early planning through ongoing optimization, with a focus on long-term stability and measurable growth.

We have partnered with more than 200 clients across e-commerce, fintech, healthcare, and enterprise SaaS. A 4.9 client rating reflects consistent delivery across multiple lifecycle iterations.

Our services include:

- Strategy and technical feasibility validation

- UX and UI design aligned with measurable user behavior

- Native iOS and Android development

- Cross-platform solutions

- Generative AI development

- Custom software development

- SaaS development

- Web design

- Release management and staged rollouts

- Production monitoring and analytics setup

- Post-launch maintenance

We develop native iOS and Android apps using Swift, Objective-C, Java, and Kotlin. When a project needs cross-platform support, we use React Native or Flutter. We also integrate payment gateways, analytics tools, and monitoring setups based on what the product actually requires.

After launch, our work does not stop. We review performance logs. We track crash reports. We clean up technical debt when it starts slowing down delivery. Each release becomes part of an ongoing improvement cycle. That is how a product remains stable while it continues to grow.

Common FAQs About the Mobile App Development Lifecycle

What is the difference between a lifecycle and a development process?

A development process explains how engineers build software. A lifecycle explains how the product evolves over time. The process is task-focused. The lifecycle is product-focused.

How long does one lifecycle iteration usually take?

Most teams work in cycles of two to four weeks, though the duration depends on scope. High-risk changes often take longer.

Can lifecycle phases overlap in Agile development?

Yes. Planning for the next iteration may start while the previous build is still in testing. In many projects, the mobile app development lifecycle steps run in parallel.

When does the lifecycle actually start and end?

It starts when a user problem is validated. It ends when the application is retired from production. A mobile app development lifecycle diagram usually shows this as a loop rather than a straight line.

How much effort typically goes into post-launch phases?

Expect to spend a significant share of engineering time after release. You monitor production, fix production bugs, adapt to OS updates, and keep improving performance.

How do small teams manage a full lifecycle effectively?

They rely heavily on automation and shared ownership. Developers support testing and releases when there is no dedicated QA team.

Final Thoughts about Mobile App Lifecycle

Many apps lose momentum because businesses treat launch as a finish line. In practice, launch is the first real test. Actual users behave differently from test cases. That is when the product begins to reveal its strengths and weaknesses.

Thinking in lifecycle terms changes daily decisions. It shifts focus from feature delivery to long-term product health. Continuous improvement becomes routine work, not a slogan. Regular tuning keeps performance stable. Ongoing cleanup limits technical debt. Small improvements over time are safer than large rewrites.

If there is one practical takeaway, it is this: make iteration part of your operating model.

- Start each planning cycle by reviewing real production metrics.

- Reserve sprint resources for maintenance and refactoring.

- Release changes gradually instead of exposing everyone at once.

- Set priorities based on observed behavior, not internal assumptions.

When you work this way, the product matures steadily. Stability continues to improve while user trust grows. The application remains relevant long after its first release.