AI once felt like a distant concept, but in 2025, artificial intelligence is beyond the experimental phase. It has reached implementation.

Teams across industries are using artificial intelligence to automate decisions, improve accuracy, and reduce costs. Yet for all its promise, the most significant challenges in AI development surface during deployment.

From disconnected data pipelines to mismatched goals between teams, each stage introduces risks that scale quickly.

According to Gartner, only 54% of AI projects make it from pilot to production, often due to gaps in deployment strategy, infrastructure, and cross-functional alignment.

This guide outlines those challenges across the full development lifecycle, highlighting where teams get stuck and how to solve them. From data quality to cross-functional alignment, it provides a clear technical path for organizations building or scaling production-grade AI systems.

Understanding the AI Development Lifecycle

You can’t build an AI system in a single step. Why? Because each phase solves a different kind of problem. While model training gets most of the spotlight, it’s what comes before and after that defines success.

Think about it. How useful is a high-performing model if the data feeding it is a mess? Or if the infrastructure can’t handle real-time input?

Every decision you make early on, such as what problem to solve, what success looks like, and what data to trust, sets the tone for everything that follows.

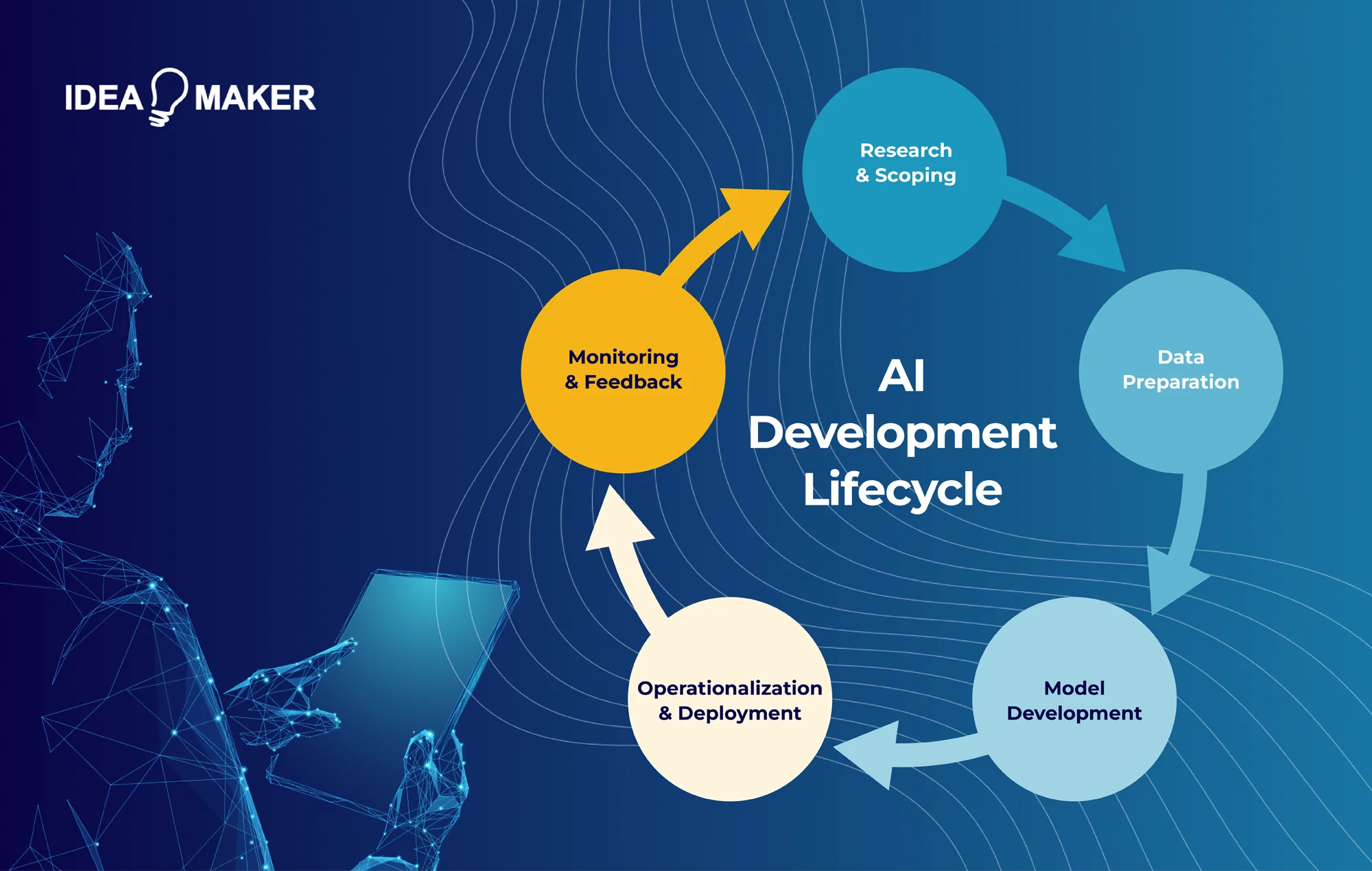

Here’s the reality: AI development follows a lifecycle.

It starts with research and planning, moves through data preparation and modeling, and ends (or continues) with deployment and monitoring.

Miss the sequence, and you introduce AI challenges that compound fast, such as cost overruns, model failure, ethical risks, and stalled projects.

So if you’re asking where things go wrong, the answer usually isn’t at the end. It’s at the start, long before the first line of code is written.

Let’s break down the major stages of this lifecycle and what makes each one risky if mishandled:

- Research and Scoping: This is where the entire project direction is set. What problem are you solving? What data do you need? What does success look like from a business and technical standpoint? The answers must be aligned, not just within the AI team, but across product, data, compliance, and leadership, because downstream stages like data prep and deployment rely on these foundational decisions. When research is siloed, problems surface long before deployment begins: mismatched objectives, scope creep, or models that don’t support actual business goals.

- Data Preparation: You might have data, but that doesn’t mean it’s usable. Cleaning, labeling, and transforming are where invisible problems creep in. Unbalanced datasets, missing values, and poor annotation quality quietly corrupt your outcomes. If data is the fuel, most AI projects are running on low-grade gas.

- Model Development: This is the creative lab space: experiment, train, test, repeat. The model might look great in offline validation, but what happens when it meets variability? Overfitting, weak generalization, and lack of interpretability show up fast. The biggest AI problems here aren’t always technical. They’re about not stress-testing for edge cases or aligning the model’s output with practical decisions.

- Operationalization and Deployment: Now comes the transition from theory to production. But building a model is one skill set. Running it at scale is another. This is where model building and operationalization part ways. The first is about performance; the second is about resilience. You need real-time monitoring, retraining workflows, version control, compliance support, and systems that can evolve. Many teams deploy once and walk away. That’s how models drift, decay, and fail silently in production.

Common Challenges in AI Development

The lifecycle is a chain, and any weak link can break this system. Before the first line of code is written, many projects are already at risk. Why? Because the first challenge isn’t technical at all. Instead, it’s strategic. Take a look at the most common AI implementation challenges.

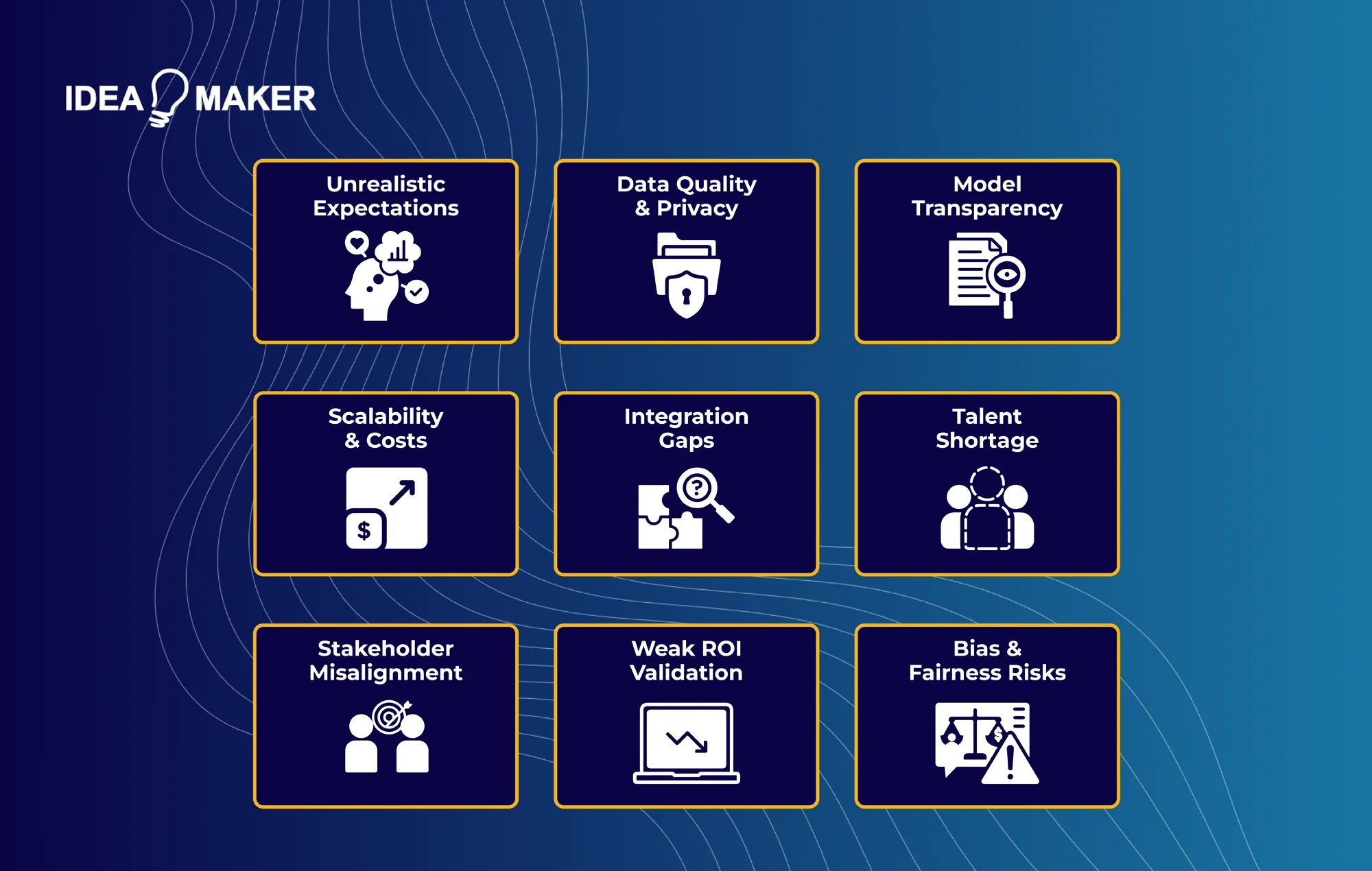

1. Limited Understanding and Unrealistic Expectations

One of the biggest challenges in AI development is conceptual. In fact, McKinsey’s State of AI report shows that 44% of organizations stall due to a lack of executive alignment, a sign that misunderstanding at the top can derail the entire process.

When business leaders, product owners, or executives don’t fully understand what AI can and can’t do, the entire project starts with the wrong expectations.

You might hear:

- “Can we just feed the model more data and let it learn?”

- “Why isn’t it 100% accurate yet?”

- “Can this go live next quarter?”

These aren’t bad questions. They come from a gap in knowledge. But if those gaps aren’t addressed early, they lead to mismatched goals, poor resourcing, and pressure to deliver results before your foundation is ready.

This is one of the core AI problems: expecting instant answers from a system that’s still being trained, tested, and integrated. Without the right framing, AI seems like magic, which makes any delay feel like failure.

Educating non-technical stakeholders is just as critical as writing code. Teams need shared language around what success looks like, how long things take, and what risks are involved.

Otherwise, technical teams end up working against unrealistic deadlines or building solutions that don’t align with business needs.

If you want your AI to deliver business value, alignment starts with understanding. Not just what the model does, but how it fits into your people, data, and infrastructure.

2. Data Quality, Availability, and Confidentiality

AI models are only as good as the data they learn from. And that’s where many projects hit a wall.

You might have the volume, but is it complete, structured, and labeled properly? In most cases, the answer is no.

Teams work with outdated spreadsheets, noisy logs, or raw customer data that lacks context. These issues lead to unreliable models and wasted compute cycles.

Another major challenge of implementing AI is data privacy. Handling personal or sensitive information introduces legal risk, especially with regulations like GDPR, HIPAA, or local compliance rules.

Standardizing datasets, adding clear annotations, and securing access to high-integrity data are not backend tasks. They’re prerequisites. If your inputs are messy or non-compliant, the rest of your system can’t function properly, no matter how advanced your algorithms are.
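To make that concrete, here is a minimal sketch of a pre-training data check. It assumes your records are plain dictionaries; the function name `data_quality_report`, the field names, and the sample tickets are all hypothetical and only illustrate the idea of counting missing values and measuring label balance before any model sees the data.

```python
from collections import Counter


def data_quality_report(rows, label_key, required_keys):
    """Summarize missing values and label balance for a list of dict records."""
    # Count records where a required field is absent, None, or an empty string.
    missing = {
        key: sum(1 for row in rows if row.get(key) in (None, ""))
        for key in required_keys
    }
    # Label distribution, ignoring records that have no label at all.
    labels = Counter(
        row[label_key] for row in rows if row.get(label_key) not in (None, "")
    )
    labeled = sum(labels.values())
    # Share of the rarest label; a very small value signals an unbalanced dataset.
    minority_share = min(labels.values()) / labeled if labeled else 0.0
    return {
        "rows": len(rows),
        "missing_by_field": missing,
        "label_counts": dict(labels),
        "minority_share": minority_share,
    }


# Hypothetical support-ticket records, for illustration only.
tickets = [
    {"text": "refund please", "channel": "email", "label": "billing"},
    {"text": "", "channel": "chat", "label": "billing"},
    {"text": "app crashes", "channel": None, "label": "bug"},
    {"text": "charged twice", "channel": "email", "label": "billing"},
]
report = data_quality_report(tickets, "label", ["text", "channel"])
print(report)
```

A report like this is cheap to run on every data refresh, which makes it a reasonable gate to place in front of training jobs.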

3. Model Interpretability and Transparency

Model interpretability refers to how well humans understand the decisions made by an AI model. Transparency is about how clearly the model’s structure, inputs, and reasoning process can be explained and examined. Together, they determine how accountable, trustworthy, and safe your AI system really is.

Why does this matter?

You’ve trained a model that performs well on paper, but can you explain how it makes decisions?

Many AI systems, especially those using deep learning (like neural networks built with TensorFlow or PyTorch), function like black boxes. You feed them data, they return outputs, but the logic in between? Opaque.

This is quite a problem.

Your stakeholders want confidence. Regulators demand clarity, especially in industries like finance, healthcare, and HR, where explainability is increasingly expected under frameworks like the EU AI Act or proposed US legislation such as the Algorithmic Accountability Act.

You can’t build trust with a model no one understands. Use tools like SHAP, LIME, or Captum to surface reasoning behind predictions. And design your pipeline, especially during the validation and MLOps phase, to include interpretability as a core metric, not an afterthought.

Because if you can’t explain it, you can’t defend it.
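SHAP, LIME, and Captum each have their own APIs, so rather than guess at a specific setup, the sketch below uses a simpler, library-free stand-in: permutation importance. It shuffles one feature at a time and measures how much accuracy drops. The toy model, the data, and the function name are assumptions for illustration, and a single shuffle is noisy, so in practice you would average over several repeats.

```python
import random


def permutation_importance(predict, X, y, n_features, seed=0):
    """Return baseline accuracy and the accuracy drop when each feature is shuffled."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(row) == truth for row, truth in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    drops = []
    for j in range(n_features):
        # Shuffle column j across rows, leaving every other column untouched.
        column = [row[j] for row in X]
        rng.shuffle(column)
        shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
        # A larger drop means the model leans more heavily on this feature.
        drops.append(baseline - accuracy(shuffled))
    return baseline, drops


# Hypothetical toy model: it only looks at feature 0 and ignores feature 1.
def toy_predict(row):
    return int(row[0] > 0.5)


X = [[0.9, 3], [0.1, 7], [0.8, 1], [0.2, 5], [0.7, 2], [0.3, 9]]
y = [1, 0, 1, 0, 1, 0]
baseline, drops = permutation_importance(toy_predict, X, y, n_features=2)
print(baseline, drops)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction, so its importance is exactly zero. That is the kind of plain-language statement stakeholders can follow.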

4. Infrastructure Scalability and Cost Management

Getting a model to run is one thing. Keeping it performant as demand grows is another.

As your AI system matures, so do its infrastructure needs. Training even mid-sized models requires significant compute resources such as GPUs, high-throughput storage, and parallel processing.

Without the right architecture, your costs grow faster than your results.

Choosing where and how to host your system (cloud platforms like AWS or Azure, on-prem clusters, or hybrid setups) directly impacts your ability to scale.

But here’s the challenge: many environments weren’t built for AI. Fixed-capacity systems can’t keep up with real-time inference. Manual provisioning slows down deployments.

If your infrastructure can’t adapt to your model’s lifecycle, it will bottleneck progress. That’s why it’s essential to use scalable orchestration tools like Kubernetes, autoscaling policies, and observability tools that track usage by service.

AI projects fail quietly when the system behind them can’t keep up. To overcome this, design infrastructure around modular microservices, implement dynamic scaling with Kubernetes, and monitor workloads continuously to optimize resource allocation and reduce runaway costs.
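For a feel of what dynamic scaling actually computes, the sketch below mirrors the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas equal the current replica count times the ratio of the observed metric to the target, rounded up, with no change when the ratio is within a small tolerance. The function name, the default bounds, and the example numbers are assumptions for illustration; a real autoscaler also handles stabilization windows and missing metrics.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Estimate the replica count an HPA-style autoscaler would request."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds so a traffic spike cannot run up unbounded cost.
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical inference service targeting 60% average CPU utilization.
print(desired_replicas(4, 90, 60))   # load above target: scale out
print(desired_replicas(4, 63, 60))   # within tolerance: no change
print(desired_replicas(4, 600, 60))  # spike: capped at max_replicas
```

The `max_replicas` cap is where cost control lives: it trades some latency under extreme load for a predictable ceiling on spend.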

5. AI Integration, Implementation, and Strategy Gaps

AI integration means connecting a trained model to your actual workflows, tools, and systems so it delivers value. It’s the difference between building an experiment and launching a solution.

A working proof of concept isn’t the same as a working product. And that gap between lab model and live system is where most AI projects get stuck.

The issue usually isn’t just code. It’s fragmentation.

Teams develop isolated models without thinking about how those models will plug into the rest of the business. Or they run into technical debt from legacy systems that don’t support real-time inference, orchestration, or monitoring.

For instance, a team might train a smart chatbot for support tickets but discover during handoff that the backend systems can’t process its outputs, or compliance flags the data usage.

That’s where firms like Idea Maker come in. We help clients transition beyond pilot mode, designing secure, scalable infrastructure with retrainable models, lightweight connectors, and human-in-the-loop checkpoints that actually work in production.

If your models are floating in silos or stalling at integration, the problem isn’t the algorithm. It’s the system around it.

6. Talent Shortage and Cross-Functional Alignment

AI projects need coordination. And that’s where one of the challenges of artificial intelligence pops up.

Suppose your company builds a recommendation engine. You hire strong machine learning talent, but the data team isn’t looped in, and the product managers don’t fully understand the model’s constraints. The result? Delays, rework, and a solution that doesn’t match user needs.

This kind of misalignment is common. Specialized roles like DataOps engineers, ML engineers, and AI product managers are in high demand, but rarely integrated into a unified workflow. Engineering, product, and business teams work in parallel, not together. Communication breaks down. Incentives differ.

AI requires a shared understanding of objectives, data access, deployment timelines, and business impact. Without strong collaboration, progress slows and outcomes suffer.

Hiring more people doesn’t solve it. What matters is how well your teams align, from planning through deployment.

7. Misalignment Between Stakeholders and AI Teams

Challenges in AI development often begin with human disconnects, not technical ones. When business teams want immediate success and AI teams focus on precision, the gap creates bottlenecks almost immediately.

You’ve probably seen it: the business wants better customer retention, but the data science team ends up optimizing for click-through rates. No shared KPIs, no shared success.

This is one of the most frustrating AI problems because it’s not caused by bad code. It’s caused by poor translation between priorities.

Product leads may not understand model limitations, while data scientists may lack business context.

Suppose you’re deploying an NLP model for customer support. The developers focus on accuracy. Meanwhile, the ops team is waiting for call resolution metrics. The result? A model no one knows how to evaluate.

You can’t align AI with strategy unless both sides speak the same language and define value the same way.

8. Weak Business Case and ROI Validation

You can’t fix what you don’t track. It’s one of the most common challenges in AI development. AI teams ship models, but without business metrics tied to them, they can’t prove impact.

This isn’t rare. A 2024 MIT Sloan study found that only 26% of AI adopters have a well-defined ROI framework in place.

Everyone talks about models. Few track their actual outcomes.

Too often, success is measured by accuracy alone. But what does 92% accuracy mean in revenue? In churn reduction? In time saved?

Challenges of implementing AI get worse when results don’t translate into measurable business outcomes. Microsoft once piloted an AI model to optimize energy consumption in its data centers. The model worked, reducing cooling costs by 10 to 15%, but only after the team tied predictions directly to infrastructure changes and energy KPIs.

Without that operational alignment, the project risked being shelved like many AI experiments that fail to prove ROI. If your model’s impact isn’t visible in the same dashboards your executives use, it doesn’t matter how accurate it is in a Jupyter notebook.
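One way to put a number in front of executives is to convert a model’s operational effect into money and compare it with what the system costs to run. The sketch below assumes a ticket-routing model that saves a fixed number of minutes per ticket; every figure and function name is hypothetical, and the ROI formula is the standard (benefit minus cost) divided by cost.

```python
def annual_benefit_from_time_saved(items_per_year, minutes_saved_per_item, hourly_cost):
    """Convert minutes saved per item into an annual labor-cost benefit."""
    return items_per_year * minutes_saved_per_item / 60 * hourly_cost


def simple_roi(annual_benefit, annual_cost):
    """Return ROI as a fraction: 0.5 means a 50% return on what was spent."""
    return (annual_benefit - annual_cost) / annual_cost


# Hypothetical numbers, for illustration only.
benefit = annual_benefit_from_time_saved(
    items_per_year=120_000, minutes_saved_per_item=2, hourly_cost=30
)
roi = simple_roi(annual_benefit=benefit, annual_cost=80_000)
print(f"benefit=${benefit:,.0f}, ROI={roi:.0%}")
```

The arithmetic is trivial on purpose. The hard part is agreeing with the business on the inputs, which is exactly the alignment work this section describes.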

9. Bias, Fairness, and Ethical Risks

Bias in AI refers to systematic errors in a model’s predictions caused by unbalanced data, assumptions in algorithms, or societal inequalities encoded into the development process.

Let’s say you train a hiring model using past recruitment data from a company where most hires were men. The model may learn to favor male applicants, not because they’re more qualified, but because the data suggests that’s what success looks like. That’s data bias: the system mirrors and amplifies past discrimination.

One of the most difficult challenges of implementing AI is recognizing biased outcomes like this when the model is, technically, working.

When training data reflects historical inequalities, the outcomes can unintentionally reinforce bias. That’s how credit scoring models reject qualified applicants, or facial recognition tools perform worse on darker skin tones.

These AI issues are legal, reputational, and deeply human. Regulation around AI fairness is tightening worldwide, from the EU AI Act to proposed US legislation such as the Algorithmic Accountability Act. Meanwhile, the NIST AI Risk Management Framework offers a structured, voluntary approach to identifying and mitigating risks like bias, security gaps, and misuse early in the AI lifecycle, before deployment.

But governance is still murky. Who’s responsible when an algorithm causes harm? The developer? The business? The data?

To reduce bias, build diverse datasets, regularly audit outputs, and involve humans in critical decision loops. Also, document your model assumptions, test across different demographic groups, and ensure your evaluation metrics reflect real-world impact instead of just accuracy on paper.
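Testing across demographic groups can start very simply. The sketch below computes the false-negative rate per group, meaning the share of truly qualified cases the model rejected, and reports the gap between groups. The labels, predictions, and group names are made up for illustration; dedicated libraries such as Fairlearn offer richer metrics, but the underlying comparison looks like this.

```python
from collections import defaultdict


def false_negative_rate_by_group(y_true, y_pred, groups):
    """Share of actual positives predicted negative, computed per group."""
    positives = defaultdict(int)
    misses = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 0:
                misses[group] += 1
    # Groups with no actual positives are left out to avoid dividing by zero.
    return {group: misses[group] / positives[group] for group in positives}


# Hypothetical audit sample: 1 = qualified (truth) or approved (prediction).
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = false_negative_rate_by_group(y_true, y_pred, groups)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

Here group B’s qualified applicants are rejected twice as often as group A’s, even though overall accuracy might look acceptable. That is the pattern an audit is meant to catch.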

At Idea Maker, we’re equipped to support fairness in AI systems, from bias mitigation strategies during training to human-in-the-loop safeguards post-deployment. We also guide clients in creating clear accountability frameworks, ensuring these principles aren’t an afterthought.

Fairness is a requirement for long-term trust. If your system can’t explain itself or justify its decisions, it won’t survive scrutiny.

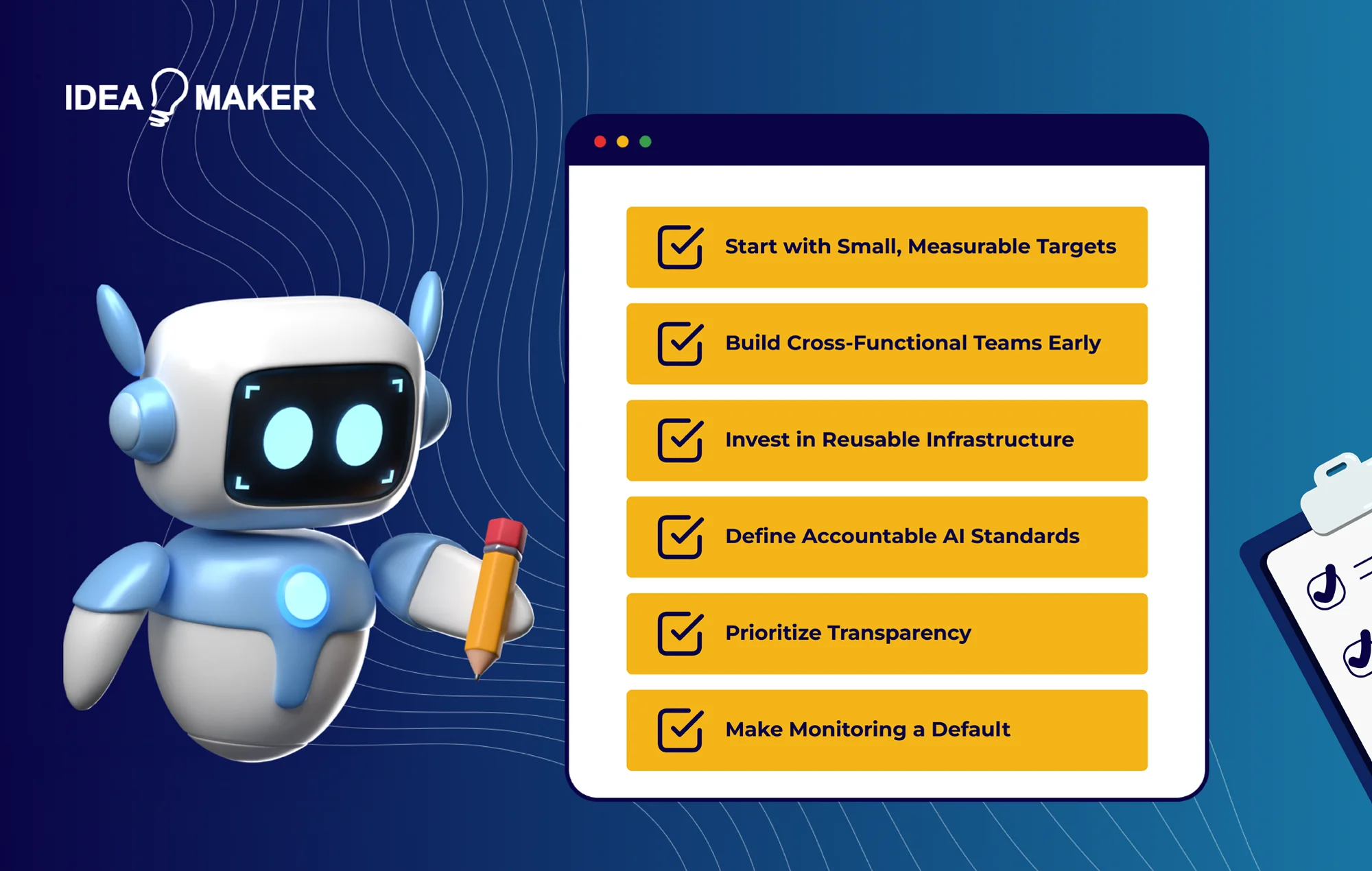

Best Practices for Navigating AI Development Challenges

Most challenges in AI development don’t stem from the algorithm; they come from everything around it.

Planning, communication, handoffs, and systems design introduce complexity that compounds over time.

If your last AI rollout stalled or never made it to production, you’re not alone. These practices don’t guarantee success, but they reduce the failure points that most teams underestimate when overcoming the challenges of AI.

Start Small With a Target You Can Actually Measure

Start with a clear, bounded use case like automating ticket routing or cutting review time by 30%. Choose a target with traceable data and a visible operational impact. Vague wins like “better predictions” won’t survive budget reviews.

Build Teams That Know Each Other

One of the biggest AI implementation challenges? Teams building in parallel, never in sync.

You need data engineers, backend, infra, and compliance in the room, early. Not after the model’s trained. Otherwise, you’re shipping tech into a system that’s not ready to use it.

Invest in Things You Can Reuse

If you’re rewriting every ETL every time, you’re already behind.

Build once, deploy often.

A shared feature store, versioned data inputs, or model registry saves hundreds of hours down the line.

Define What “Accountable AI” Looks Like for You

If your model fails, who owns the fix?

Auditability means more than logging errors. It means knowing who trained what, when, with which data. Compliance teams shouldn’t need a data science degree to follow the chain.
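As a rough sketch of what “who trained what, when, with which data” can look like, the snippet below builds an immutable training record with a SHA-256 fingerprint of the dataset and serializes it as one JSON line. The `TrainingRecord` fields, the model name, and the sample data are assumptions; a model registry such as MLflow would typically store the equivalent metadata for you.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class TrainingRecord:
    model_name: str
    model_version: str
    trained_by: str
    trained_at: str        # ISO 8601 timestamp in UTC
    dataset_sha256: str    # fingerprint of the exact training data
    hyperparameters: dict


def fingerprint(data: bytes) -> str:
    """Hash the raw dataset bytes so any later change to the data is detectable."""
    return hashlib.sha256(data).hexdigest()


def make_record(model_name, model_version, trained_by, dataset_bytes, hyperparameters):
    return TrainingRecord(
        model_name=model_name,
        model_version=model_version,
        trained_by=trained_by,
        trained_at=datetime.now(timezone.utc).isoformat(),
        dataset_sha256=fingerprint(dataset_bytes),
        hyperparameters=hyperparameters,
    )


# Hypothetical training run, for illustration only.
record = make_record(
    "ticket-router", "1.3.0", "jane.doe",
    b"id,text,label\n1,refund please,billing\n",
    {"max_depth": 6},
)
audit_line = json.dumps(asdict(record), sort_keys=True)
print(audit_line)
```

A line like this, appended to a write-once log at training time, lets a compliance reviewer answer the ownership question without opening a notebook.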

Don’t Build Models No One Understands

When things break, and they will, somebody has to explain why.

Tools like SHAP or EBMs don’t just add transparency. They give product and legal teams language to defend outcomes when models affect people.

Make Monitoring a Default, Not an Afterthought

Every model drifts. What matters is how fast you notice. Set up alerts for input shifts, latency spikes, or rising false positives.

Build a retraining loop before you need one. Production is maintenance.
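An input-shift alert can be as small as the sketch below, which uses the Population Stability Index (PSI) to compare a feature’s distribution at training time with what production is seeing now. The binning scheme, the sample values, and the 0.2 alert threshold are assumptions; 0.2 is a commonly cited rule of thumb rather than a standard, so tune it against your own data.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if all values match.
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for value in values:
            idx = int((value - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(count / len(values), eps) for count in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline vs. shifted production traffic.
baseline_values = list(range(100))
shifted_values = [value + 30 for value in baseline_values]

psi_same = population_stability_index(baseline_values, baseline_values)
psi_shifted = population_stability_index(baseline_values, shifted_values)
ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff
print(psi_same, psi_shifted, psi_shifted > ALERT_THRESHOLD)
```

Running a check like this on a schedule, per feature, gives you the early warning that should trigger the retraining loop.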

Prove You Moved a Business Needle

High AUC? Great.

But unless that translates into reduced churn, fewer hours spent reviewing edge cases, or improved customer conversions, no one outside your team cares.

Connect every model output to an actual business result. Otherwise, it’s just math on a server.

Overcoming AI Challenges Through Strategic Collaboration

You’ve seen how implementation hurdles show up across data, infrastructure, people, and processes. But what happens when your internal team hits a wall? When expertise runs thin or systems feel misaligned?

That’s where strategic collaboration steps in.

Whether you’re scaling a proof of concept, fixing model drift in production, or validating results under tight regulations, external partnerships can unlock the missing capability or perspective your team needs.

Agencies like Idea Maker support companies through this exact journey, merging technical depth with systems-level thinking.

Here’s what that looks like in practice:

Partner With AI-Focused Agencies and Research Labs

When internal teams lack bandwidth or deep machine learning expertise, progress loses momentum.

Partnering with AI-focused agencies or academic labs can fill those gaps quite fast. These partners bring specialized knowledge in model tuning, edge-case validation, and scalable deployment pipelines.

Technically, this collaboration gives you access to optimized model architectures (like Vision Transformers or RNNs), cutting-edge toolkits (such as Hugging Face, PyTorch Lightning), and workflows aligned with MLOps standards.

Rather than reinventing the wheel, your team benefits from proven experimentation frameworks and reproducibility setups.

For instance, Pfizer worked with AI labs to simulate and optimize clinical trial scenarios during COVID-19 vaccine development. This reduced modeling time and accelerated safe deployment timelines.

Build Internal-External Hybrid Teams for Innovation

Innovation stalls when technical and domain expertise live on opposite sides of the org chart. Hybrid teams where internal leads pair directly with external ML practitioners break that cycle. This structure accelerates alignment across model design, infrastructure, and constraints.

This means embedding experienced contractors or agency engineers who know how to handle feature stores, retraining triggers, and containerized deployments while your internal team drives the product narrative.

Together, they can spin up modular ML pipelines using tools like Kubeflow, MLflow, or SageMaker, without forcing architecture decisions in isolation.

Take Adobe’s Firefly for example. It wasn’t built by keeping AI research in a separate lab. The team embedded external researchers directly into their Creative Cloud development track. That meant model outputs could be stress-tested against brand guidelines and production-ready design tools, before launch, not after.

Leverage External Governance Frameworks and Tooling

Internal checks aren’t enough when your models are headed into regulated, high-stakes environments. Silent model failures, whether due to drift, bias, or overfitting, can go undetected until they become business liabilities. That’s why external tooling is no longer optional.

To strengthen oversight, teams are integrating tooling from Google’s PAIR initiative, IBM’s AI Fairness 360, or Fairlearn directly into their CI/CD pipelines.

These tools extend internal validation efforts by testing for fairness, explainability, and edge-case resilience, especially when paired with interpretability methods like SHAP, LIME, or EBMs.

Suppose you’re deploying a loan risk model in the EU. A pre-launch audit with Fairlearn might reveal skewed false-negative rates across gender segments. That early alert gives the team time to reweight training data, re-tune thresholds, and ship a model that passes both internal QA and the fairness checks your compliance team has defined.

Involve Domain Experts from Day One

Even the most sophisticated AI model will fail in production if it solves the wrong problem. Involving domain experts during problem framing, feature selection, and evaluation metric design ensures relevance from the start.

Practically, this means embedding domain SMEs (doctors in health tech, analysts in finance) into agile sprints, requirement gathering, and even data annotation stages. This cross-talk helps align system outputs with what actually matters: domain-validated outcomes.

For example, in the energy sector, a predictive maintenance model built without operator input can misinterpret safe vs. unsafe conditions. When field technicians join the loop, they can help restructure feature extraction, which improves recall and prevents false downtime alerts.

Maintain Open Feedback Channels Across Teams

Planning, communication, and handoffs introduce cumulative delays in AI implementation. Without structured communication between product owners, ML teams, and business stakeholders, model deployment becomes reactive and error-prone.

Solve this by implementing bi-directional feedback loops using shared dashboards (via Grafana, Power BI), model monitoring tools, and structured review cycles at every ML lifecycle stage, from data curation to post-deployment evaluation.

How Idea Maker Agency Solves AI Development Challenges

When your workflows sprawl across disconnected systems, manual approvals, or legacy code, scaling AI becomes more than a technical problem.

We’ve seen it firsthand.

That’s why our AI development services focus on designing scalable, hybrid architectures that fit into your current workflows.

Whether you rely on spreadsheets, outdated CRMs, or rigid approval chains, we don’t ask you to rebuild everything. Instead, we build around it, layering machine learning where it helps most, and using automation to connect what’s already working.

Our systems are grounded in ethical AI governance from day one, with audit-ready pipelines, explainable models, and domain-specific fairness checks baked in.

And because models don’t stop at deployment, we bring robust MLOps tooling, retraining workflows, version control, and feedback loops so your system improves over time.

You get AI you can trust, that your team can maintain, and your stakeholders can understand.

FAQ: Solving Common AI Development Challenges

What is the biggest challenge in AI development today?

The biggest challenge in AI development is bridging the gap between model performance and business outcomes. Many organizations face delays because their AI models lack integration, scalability, or measurable ROI.

How do you make AI models more explainable?

To improve AI model explainability, use tools like SHAP, LIME, and Explainable Boosting Machines (EBMs). These tools show how input features influence predictions, helping both technical and non-technical stakeholders understand model behavior.

What tools help manage machine learning pipelines at scale?

Popular MLOps tools include MLflow, Kubeflow, SageMaker, and TFX. These platforms support the full ML lifecycle, including model training, deployment, version control, and continuous monitoring at enterprise scale.

How can startups without AI experts start safely?

Startups can begin AI development by using no-code AI platforms, pre-trained models, or working with external AI consultants. Focus on low-risk use cases like customer support routing or simple data classification to build momentum.

What’s the cost of deploying AI at scale?

The cost of deploying AI at scale depends on infrastructure, use case complexity, and compliance requirements. Small pilots can start under $50,000, but enterprise-level systems with full MLOps support can exceed $1 million.

How do you calculate ROI for an AI solution?

AI ROI is measured by comparing the value a system generates against what it costs to build and run. Look at metrics like time savings, increased accuracy, reduced errors, or improved conversions, convert them into a monetary benefit, and weigh that against total cost to validate value.

What makes AI trustworthy for end-users?

Trustworthy AI requires transparency, human oversight, and ethical auditing. Explainable outputs, consistent performance, and compliance with privacy laws build user confidence in AI systems.

How does external collaboration improve AI outcomes?

External collaboration brings in outside expertise, proven tools, and faster results. By partnering with the right teams, you build more reliable AI without slowing down your roadmap.

Wrapping Up

If your AI projects feel stuck between proof-of-concept and production, you’re not out of options. The challenges in AI development aren’t solved with more dashboards or another off-the-shelf platform. They’re solved by building systems that match how your business actually works.

That means identifying the gaps: teams working in silos, tools that don’t talk to each other, and workflows too rigid to adapt.

AI isn’t about overengineering the obvious. It’s about reinforcing the decisions, tasks, and handoffs that already drive value, just with more speed, context, and accuracy.

Start with a specific pain point. Design a small win. Build from there.

At Idea Maker, we help companies move past pilot fatigue and into real impact. If you’re ready to make AI part of your core operations, not just an experiment, we’re ready to help you do it right. Get in touch with Idea Maker to build automation that fits your workflow, scales with your goals, and delivers measurable results from day one.